How Curiosity and a Flash Drive Sparked the iOrchestra Revolution

Two Friends, a Slow Laptop, and the Bold Leap to Green AI, Redefining What’s Possible for Datacenters and Autonomous Vehicles

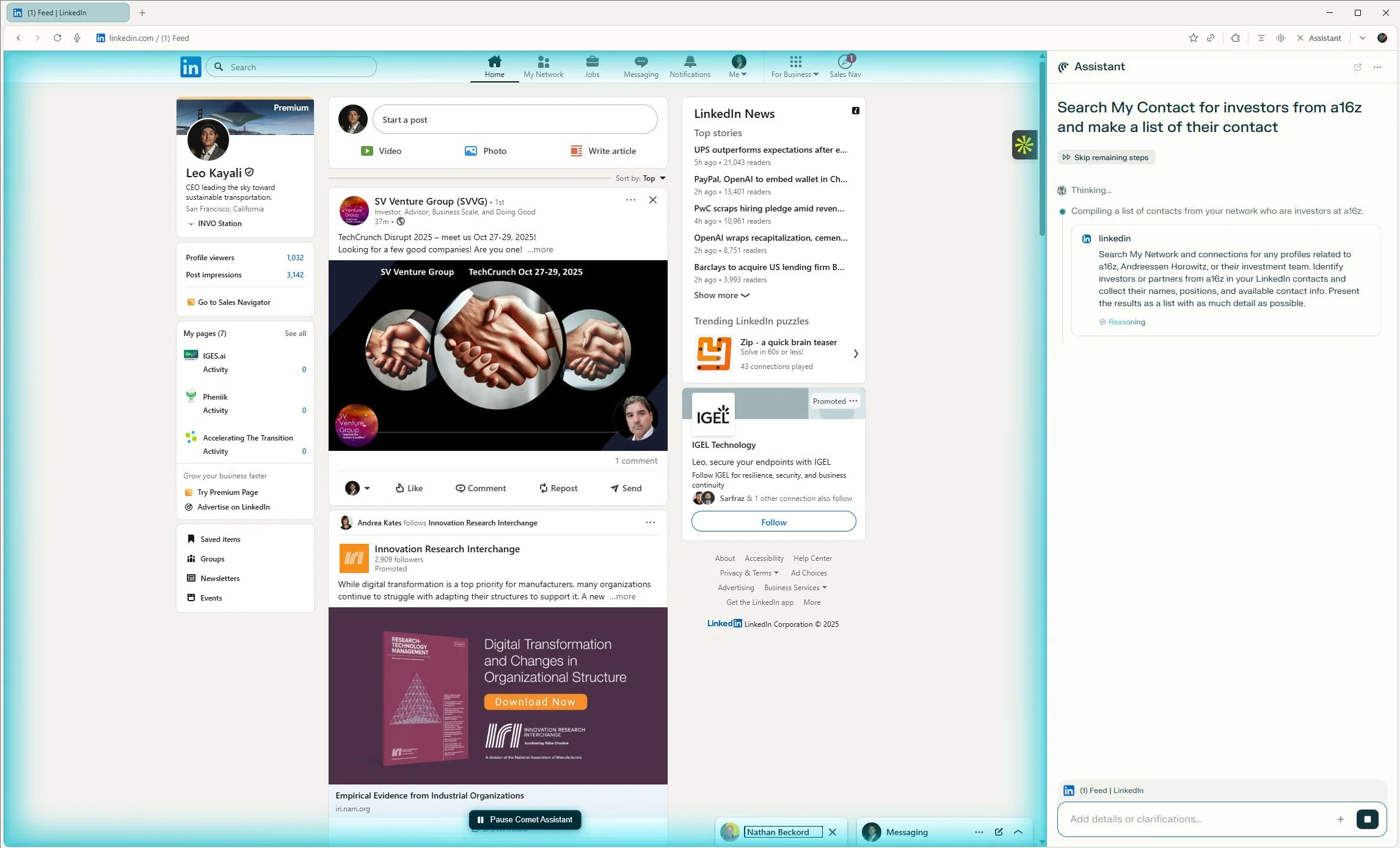

I was working with my buddy Andres at WeWork Embarcadero right when I got early access to the Comet AI browser from Perplexity. Naturally, I shared the install file on a flash drive so Andres could jump in too. Even though he didn’t have access, it worked (and we couldn’t stop laughing).

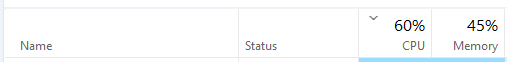

Because my Alienware was in for maintenance, I was using an older laptop. Suddenly, everything slowed to a crawl whenever I used Comet for even simple agentic AI tasks. Task Manager revealed a shocker: Comet was eating up 30–40% of my CPU and 4.5–6.5 GB of memory, pushing the machine to its limits.

Comet Assistant in agentic action

Hardware resources for the same task

That made no sense, so I started tracking exactly how agentic AI tasks were processed. What I found: Comet would scan the screen, take screenshots, convert the images to text, process what it found, push that input to the browser, validate with more screenshots, repeat the image-to-text step, and keep monitoring the results until the task was complete. This approach, which leans on image and screen processing rather than direct text, drives resource use through the roof. And it’s not just Perplexity: Gemini, OpenAI, Anthropic, Grok, and Llama have the same issue. Hardware solutions have existed for years, but never inside LLMs.

Then it clicked. Years ago, I helped build SCADA web interfaces to control and monitor hardware. We scraped the webpage to text for direct communication with remote/local hardware, and closely monitored resource usage so everything ran on basic processors, without burning up memory or cooling budgets.
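To make the contrast with the screenshot loop concrete, here is a minimal sketch of reading a page as text. It is not iOrchestra’s or the original SCADA code: the `ActionableTextExtractor` class, the `page_to_text` helper, and the sample pump-station page are all hypothetical names invented for illustration, and it uses only Python’s standard `html.parser` module.

```python
from html.parser import HTMLParser


class ActionableTextExtractor(HTMLParser):
    """Hypothetical helper: collect visible text and interactive elements."""

    INTERACTIVE = {"a", "button", "input", "select", "textarea"}
    SKIPPED = {"script", "style"}

    def __init__(self):
        super().__init__()
        self.lines = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        if tag in self.SKIPPED:
            self._skip_depth += 1
        elif tag in self.INTERACTIVE:
            attr_map = dict(attrs)
            # Use the first identifying attribute we can find as a label.
            label = (attr_map.get("id") or attr_map.get("name")
                     or attr_map.get("href") or "unnamed")
            self.lines.append(f"[{tag} {label}]")

    def handle_endtag(self, tag):
        if tag in self.SKIPPED and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        text = data.strip()
        if text and not self._skip_depth:
            self.lines.append(text)


def page_to_text(html):
    """Return a plain-text summary of a page, one item per line."""
    parser = ActionableTextExtractor()
    parser.feed(html)
    parser.close()
    return "\n".join(parser.lines)


# Hypothetical control page, made up for this example.
SAMPLE_PAGE = """
<html><head><style>body { color: red; }</style></head>
<body>
  <h1>Pump Station 3</h1>
  <p>Pressure: 42 psi</p>
  <input name="setpoint" value="40">
  <button id="apply">Apply</button>
</body></html>
"""

if __name__ == "__main__":
    print(page_to_text(SAMPLE_PAGE))
```

The point of the sketch is only that the page’s text and controls can be read straight from the markup, with no screenshot or image-to-text step in between. A real agent would also need to handle dynamic content, which this example ignores.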

Suddenly, the problem and the solution made sense. Andres was instantly on board. We had an idea: do it the right way. We tried, and it worked.

Here’s why it mattered: I was building an in-vehicle Jarvis for INVO Station, an AI voice assistant that controls everything onboard and shows all results on screen. It felt natural and human, and promised huge benefits for scalable flying vehicles.

So we dove in. Market analysis, TAM, competitor research, and a full business plan: what took six months when we were bootstrapping INVO Station in 2021, we cranked out in a single week for iOrchestra using AI.

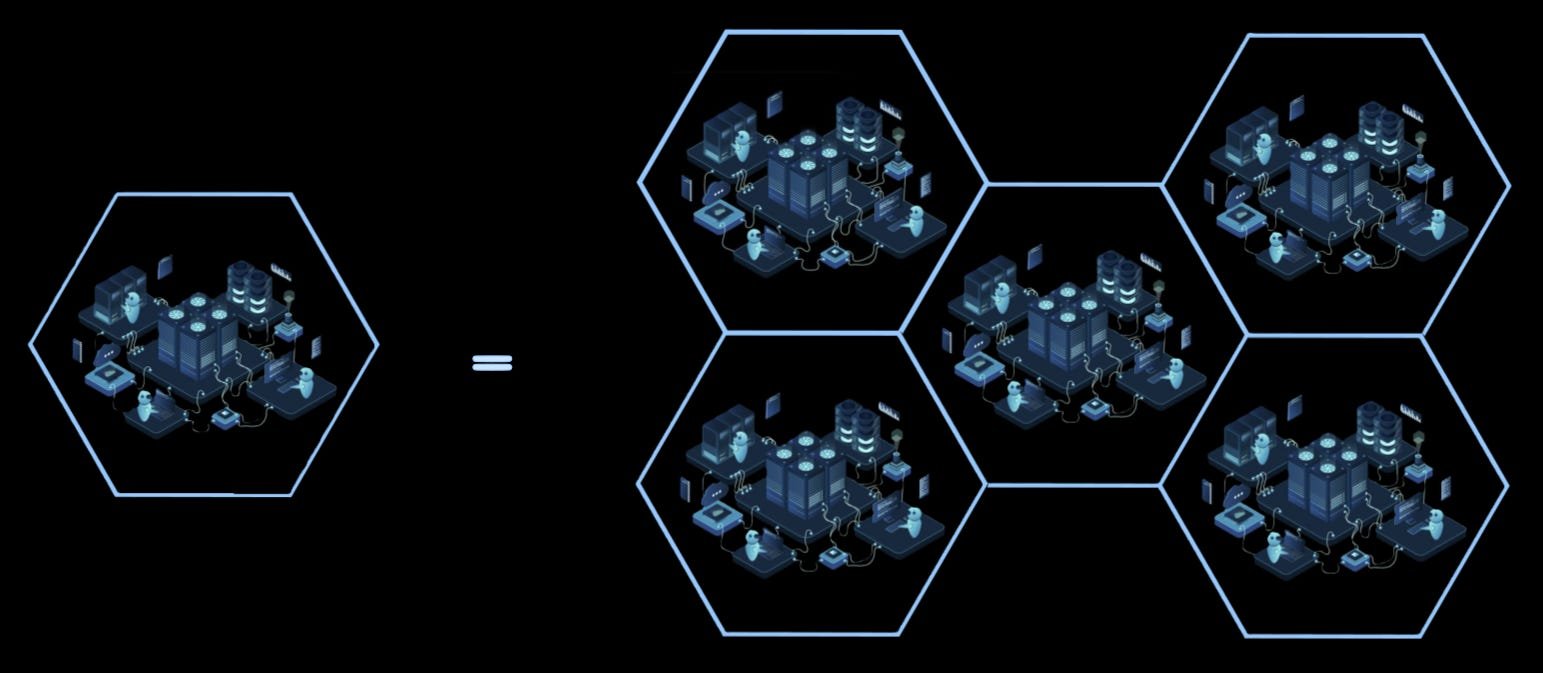

The result? We can run the same agentic tasks with 80% less CPU and memory than other models, a quantum leap for efficiency and sustainability. Less hardware, less power, less cooling, lower electric bills. In fact, if energy use scales with compute, the energy that powers one data center today could power five, all delivering the same performance.

1 Data Center = 5 Data Centers
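The arithmetic behind that five-to-one figure can be sketched in a few lines. This assumes energy use scales linearly with CPU and memory use, which is a simplification and not something measured here. The function name `capacity_multiplier` is made up for illustration.

```python
def capacity_multiplier(reduction_percent):
    """How many times more work the same energy supports
    after a per-task resource reduction (linear-scaling assumption)."""
    if not 0 <= reduction_percent < 100:
        raise ValueError("reduction_percent must be in [0, 100)")
    # Each task now needs (100 - reduction)% of the original energy,
    # so the same budget covers 100 / (100 - reduction) times the work.
    return 100 / (100 - reduction_percent)


if __name__ == "__main__":
    print(capacity_multiplier(80))
```

With an 80% reduction, each task needs 20% of the original energy, so the same budget covers five times the workload under that assumption.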

That’s massive. Suddenly, AI isn’t just about cool features; it’s about solving the datacenter power crunch that is restricting global AI growth. We built an MVP, deployed it in INVO Station, and saw game-changing results.

Now, we’re building our own datacenter, proving our approach is better for the planet and fundamentally more efficient. Andres brings the artistic vision, making our company story as unique as our engineering.

We’re here to orchestrate the impossible. Together, unstoppable.

iOrchestra isn’t just the next OpenAI; it’s the product of two friends’ curiosity, a late-night hardware hack, and a passion to reshape AI itself. Now we work, innovate, and have fun, building something better, together.

Curiosity. Collaboration. Commitment to a brighter AI future. That’s the real story behind iOrchestra.